Generative AI (Gen AI) has taken off like a rocket in just a few short years, transitioning from the realm of cutting-edge research to large-scale commercialization and widespread consumer engagement. As the influence of GenAI spreads, a growing number of businesses are realizing that conversational AI applications—such as chatbots that fuse large language models (LLM) with their organization’s data—can help them use the power of AI to multiply the value of that private in-house data.

Because GenAI is resource-intensive, the process of building a successful LLM-based in-house chatbot begins with selecting the right hardware. The server solution you choose to serve as the foundation for your LLM must support the number of simultaneous users you expect while maintaining response times within an acceptable threshold. The value of the augmented LLM increases as more and more users access it as a hassle-free way to quickly gather accurate information. One way to get started is to buy more powerful server hardware, but balancing acquisition and maintenance expenses with potential benefits is a challenge that most businesses face. Objective server performance data that reflects real-world scenarios can help you build a successful in-house LLM initiative from the bottom up.

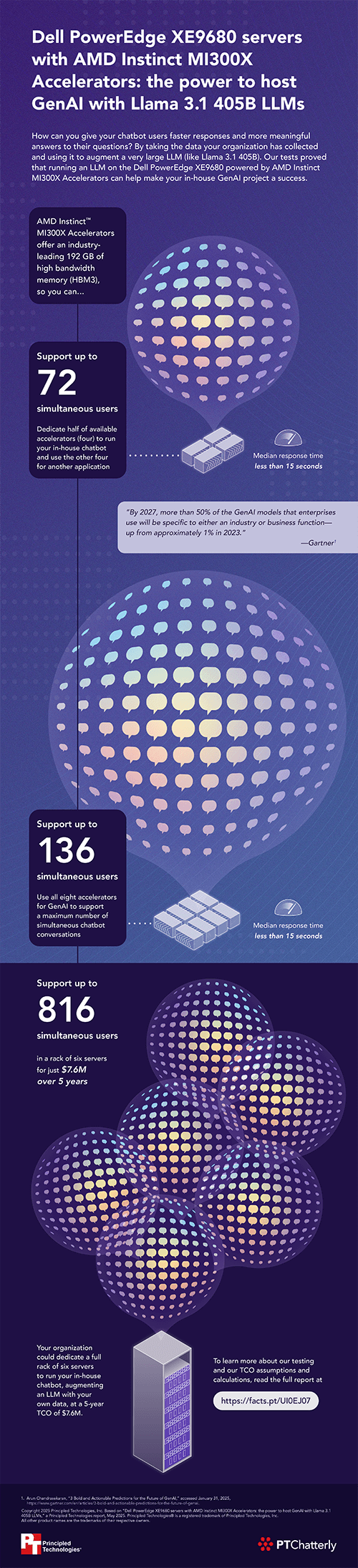

To help you plan for your GenAI server needs, we used the PTChatterly chatbot testing service to assess the performance of two configurations of a Dell PowerEdge XE9680 server equipped with eight AMD Instinct MI300X Accelerators. In the first configuration, we allocated four accelerators for our LLM workload, leaving the other four accelerators free for other workloads, as some organizations might choose to do. In the second configuration, we tested the LLM workload while utilizing all eight accelerators. To help explain how much a GenAI project might cost, we also calculated the expected five-year TCO costs of the XE9680 solution.

We found that both configurations supported substantial numbers of chatbot users. With four accelerators, the XE9680 supported 72 simultaneous users within the response time threshold—while leaving room for other workloads. That performance scaled well with the eight-accelerator use case, which supported 136 simultaneous users within the response time threshold. In addition, our research showed that with a rack of six XE9680 servers equipped like those in our tests, an in-house chatbot could support up to 816 simultaneous users for a total cost of $7.6M over five years.

If you are investigating how to fuse your data with a large, high-precision LLM, Dell PowerEdge XE9680 servers equipped with AMD Instinct MI300X Accelerators can provide the power and capacity you need to support a successful venture.

To read more about our in-house augmented LLM server performance tests and TCO calculations, check out the report and infographic below.

Principled Technologies is more than a name: Those two words power all we do. Our principles are our north star, determining the way we work with you, treat our staff, and run our business. And in every area, technologies drive our business, inspire us to innovate, and remind us that new approaches are always possible.